Roofline x ARM: Enhancing software support for ARM SVE in MLIR and IREE

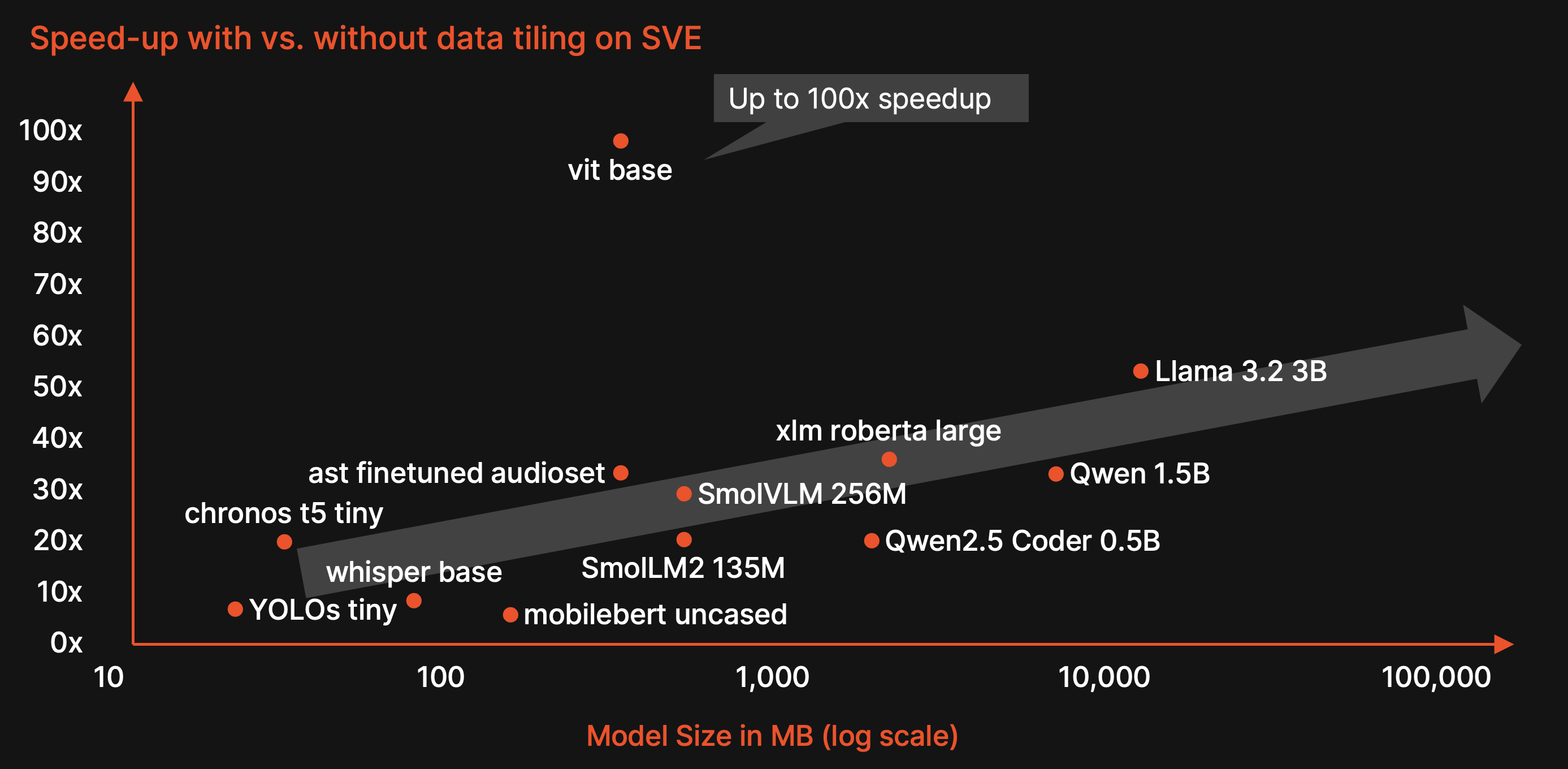

This case study showcases how Roofline and ARM enabled scalable, vector-length-agnostic ML execution on Arm CPUs by implementing data-tiled Scalable Vector Extension (SVE) support end-to-end in IREE, unlocking up to 100× speedups on real models and hardware.

SVE enables scalable SIMD for the next generation of edge ML

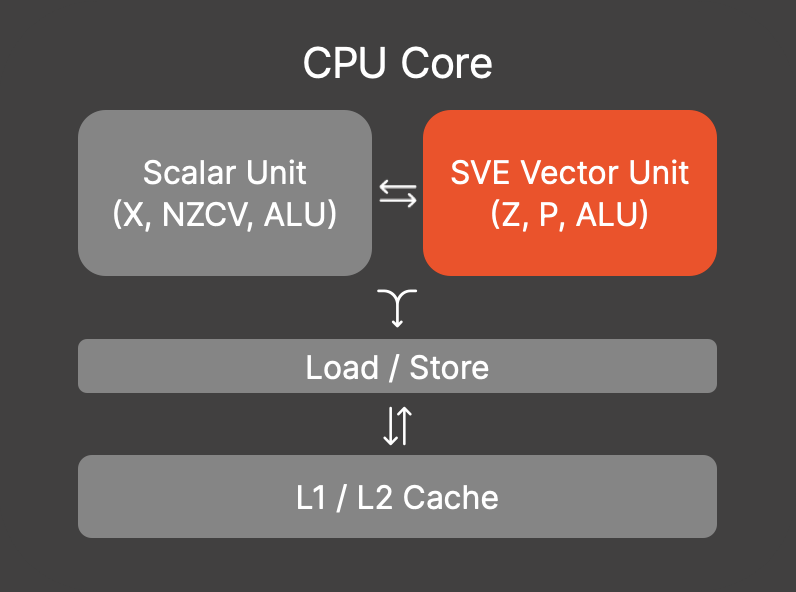

ARM CPUs dominate edge devices, and efficient ML inference on them depends heavily on Single Instruction, Multiple Data (SIMD) vectorization and proper cache utilization. In 2018, ARM introduced the Scalable Vector Extension (SVE) as its next-generation SIMD architecture, increasing the flexibility and longevity of ML software on rapidly evolving hardware.

SVE addresses a key limitation of earlier SIMD designs: fixed vector width. The vector width determines how many data elements a SIMD instruction can process in parallel. Since previous SIMD architectures such as NEON use a fixed 128-bit width, compiled ML models were not portable to newer CPUs and could thus not automatically benefit from wider vectors. SVE solves this by enabling Vector Length Agnostic (VLA) programming, where the vector length is unknown at compile time and discovered at runtime, allowing a single binary to adapt to diverse and evolving hardware. This move towards scalable vectors mirrors a broader industry trend, with architectures like RISC-V RVV following a similar approach.

ML compilers must become scalable too

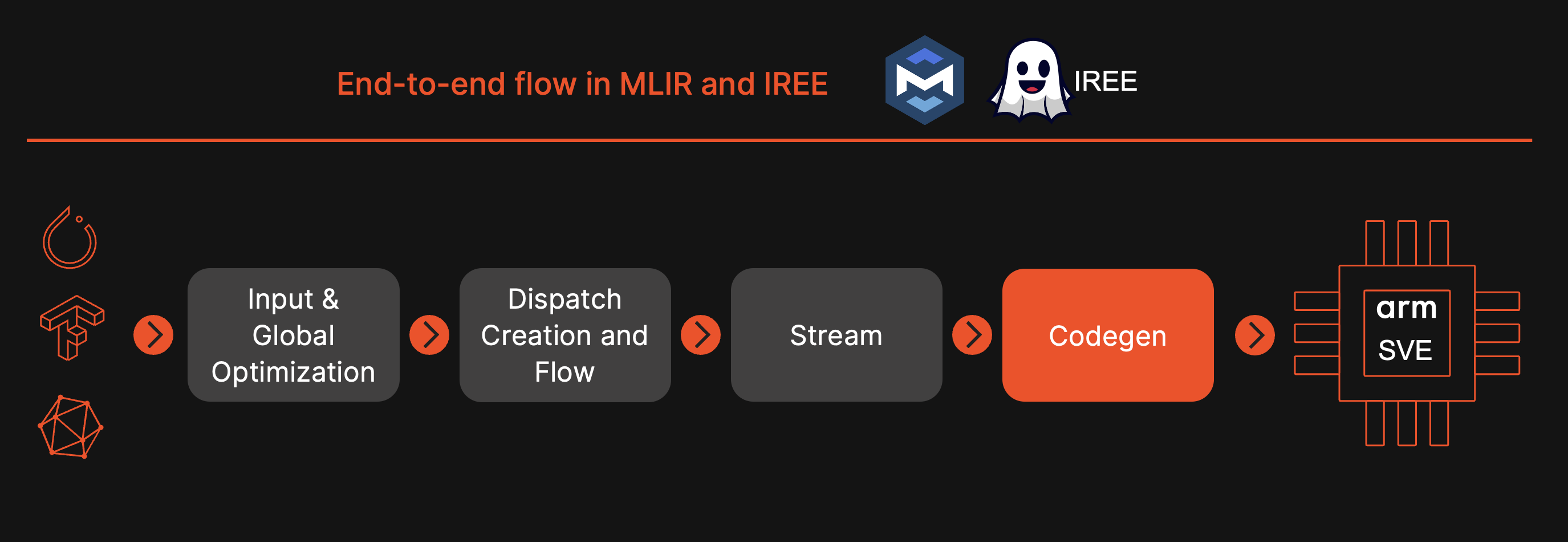

Modern ML workloads are often memory-bound, and compilers rely on data-tiling to break large tensors into small, cache-friendly blocks (“tiles”) to feed SIMD units efficiently. In MLIR and IREE, these tiled layouts were originally designed around NEON’s fixed 128-bit vector width, meaning both tile shapes and memory organization implicitly assume a constant vector size. With SVE’s scalable vectors, these static layouts are no longer suitable: the tile shapes themselves must adapt to the vector length of the underlying CPU, just as SVE instructions do. Supporting SVE in MLIR/IREE therefore requires solving two key challenges:

1. Making data-tiling scalable with respect to vector length

2. Building an end-to-end pipeline capable of lowering full models to SVE.

Our contribution: Making ML compiler infrastructure SVE-ready

To address these challenges, Roofline and Arm collaborated to extend MLIR and IREE with full support for SVE. Our work achieved two major goals:

1. We enabled scalable data-tiling that adapts to SVE’s vector length

2. We built a complete end-to-end pipeline capable of lowering real ML models to efficient SVE code.

To validate these contributions, we compiled 13 unmodified ML models end-to-end into SVE-enabled, data-tiled CPU executables using Roofline’s compiler stack. See the results section for the full evaluation.

Technically, we introduced scalable data-tiled layouts by rewriting linalg.matmul into linalg.mmt4d with inner tile sizes computed from SVE’s runtime vector length (vscale). We extended IREE’s linalg.pack and linalg.unpack to materialize these layouts, and reworked tiling and fusion so loops align with scalable tiles and pack/unpack ops fuse correctly with surrounding compute, avoiding misaligned accesses and unnecessary buffers even under VLA semantics. We enhanced the Linalg vectorizer to lower scalable mmt4d into vector contractions and ultimately into predicated SVE instructions. We further added SVE-specific low-precision compute paths - BF16 (bfmmla) and INT8 (smmla) - by designing scalable tile shapes and register usage patterns that fit SVE’s execution model. Finally, we integrated all components into IREE’s standard ML pipeline, enabling portable, vector-length-agnostic SVE binaries and fulfilling both the scalable tiling and end-to-end enablement goals.

Results: Achieving up to 100× faster ML inference with scalable SVE

We successfully implemented an end-to-end SVE pipeline in IREE and demonstrated scalable data-tiling across a set of 13 models from different classes, solving both core challenges of enabling SVE execution and vector-length-aware tiling. Using this pipeline, we validated the value of scalable data-tiling: combining data-tiling with SVE delivered up to 100× speedups over non–data-tiled SVE code, with larger models seeing the strongest gains.

Against existing deployment solutions, our scalable FP32 SVE pipeline outperformed IREE’s NEON backend on 11 of 13 models (up to 1.4×) and outperformed ExecuTorch with XNNPack on 8 of 12 models (up to 4.3×), despite running on hardware where NEON and SVE share the same 128-bit vector width. Even with SVE’s added VLA complexity, our results on equal-width hardware show that the compiler handles it seamlessly, with no loss in code quality. We further enabled BF16 (bfmmla) and INT8 (smmla) matmuls under the same scalable data-tiling flow, demonstrating functional end-to-end support for low-precision inference. Overall, the full data-tiled SVE pipeline compiled and executed real models on actual hardware, validating both the correctness and deployability of scalable VLA codegen inside MLIR and IREE.

Beyond SVE: A blueprint for scalable vector codegen

Our work demonstrates that scalable data-tiling for SVE can be integrated cleanly into a real ML compiler, proving that vector-length-agnostic codegen is both practical and highly effective, enabling multi-× speedups without requiring any model or framework changes. The FP32 pipeline already shows strong, stable performance across full models, with minimal overhead from scalability and consistent gains over IREE’s NEON backend and ExecuTorch. We also delivered the first end-to-end support for SVE’s BF16 and INT8 compute paths, establishing a solid foundation for future low-precision optimizations.

Looking ahead, several components will be upstreamed to MLIR and IREE to make scalable SVE support broadly available. Most importantly, the same mechanisms naturally extend to architectures such as Arm SME and RISC-V RVV, positioning this work as a blueprint for scalable vector enablement across upcoming CPU architectures.